Common Mistakes in Bounding Box Annotation and How to Fix Them

Introduction

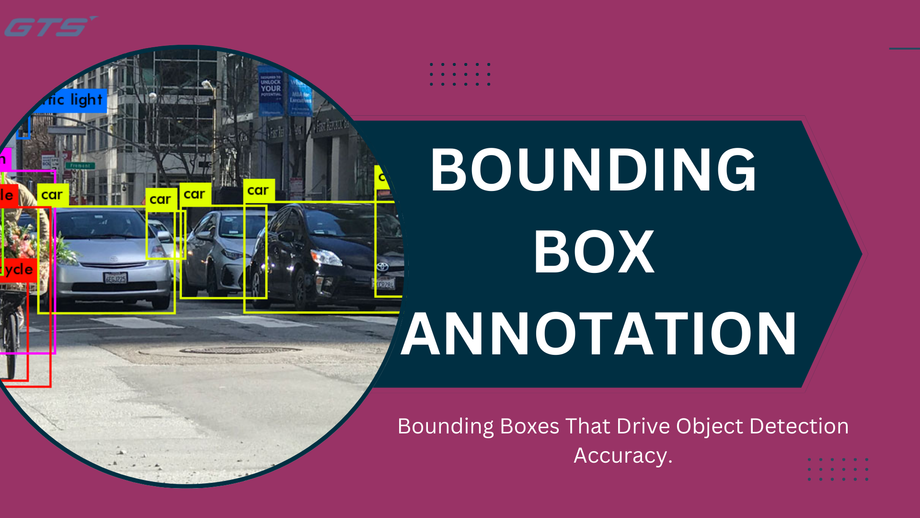

Bounding box annotation is essential for training computer vision models for object detection. Annotation precision directly affects model accuracy: even minor inaccuracies can mislead the model and degrade its predictions. Although the task may appear simple (merely drawing a box around an object), errors occur easily and can compromise the model's effectiveness.

In this article, we will examine the most prevalent errors in bounding box annotation and offer practical solutions to enhance your dataset and boost your model's accuracy.

The Importance of Bounding Box Annotation

Bounding box annotation serves as the foundation for object detection models. A well-annotated dataset allows the model to comprehend:

- Object Location: The position of the object within the image.

- Object Size and Shape: The object's dimensions.

- Object Class: The category of the object (e.g., vehicle, individual, animal).

Inaccurate bounding box annotations can hinder the model's ability to detect objects correctly, resulting in false positives, missed detections, and diminished overall performance.
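To make the three properties above concrete, here is a minimal sketch of how a single annotation can carry them. The `BoxAnnotation` class and its field names are a hypothetical schema chosen for illustration, not the format of any particular annotation tool.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class BoxAnnotation:
    """One labeled object; corners are in pixels (hypothetical schema)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    label: str  # object class, e.g. "vehicle"

    @property
    def center(self) -> Tuple[float, float]:
        # Location: where the object sits in the image.
        return ((self.x_min + self.x_max) / 2, (self.y_min + self.y_max) / 2)

    @property
    def size(self) -> Tuple[float, float]:
        # Size and shape: width and height of the box.
        return (self.x_max - self.x_min, self.y_max - self.y_min)


ann = BoxAnnotation(x_min=40, y_min=60, x_max=200, y_max=180, label="vehicle")
print(ann.center, ann.size, ann.label)
```

An error in any corner coordinate shifts both the location and the size the model is trained on, which is why small drawing mistakes matter.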

Frequent Errors and Their Solutions

Inconsistent Box Placement

Error:

- Misaligned boxes or differing sizes for the same category of objects.

- Some boxes may be excessively tight, while others are overly loose, resulting in inconsistent training signals.

Solution:

- Develop clear annotation standards to maintain uniformity.

- Utilize automated annotation tools to standardize box sizes.

- Implement a quality control process to assess box placement prior to finalizing the dataset.
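One small part of such a quality control process can be automated. The sketch below checks each box for basic validity before a human reviews placement; the function name `validate_box`, the `(x_min, y_min, x_max, y_max)` pixel format, and the `min_side` threshold are assumptions made for this example.

```python
def validate_box(box, image_width, image_height, min_side=2):
    """Return a list of problems found in one (x_min, y_min, x_max, y_max) box."""
    x_min, y_min, x_max, y_max = box
    problems = []
    # The max corner must lie strictly beyond the min corner.
    if x_max <= x_min or y_max <= y_min:
        problems.append("degenerate")
    # The box must stay inside the image.
    if x_min < 0 or y_min < 0 or x_max > image_width or y_max > image_height:
        problems.append("out of bounds")
    # Very thin boxes are often accidental clicks (min_side is an assumed threshold).
    if 0 < (x_max - x_min) < min_side or 0 < (y_max - y_min) < min_side:
        problems.append("too small")
    return problems


boxes = [(10, 10, 50, 80), (90, 20, 70, 60), (600, 400, 660, 500), (5, 5, 6, 30)]
for box in boxes:
    print(box, validate_box(box, image_width=640, image_height=480))
```

Checks like these catch mechanical errors only. Whether a box is too tight or too loose for its object still needs the written guidelines and a human reviewer.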

Inadequate Labeling of Occluded Objects

Error:

- Neglecting to annotate objects that are partially obscured by other items.

- Only marking the visible portion instead of the entire object.

Solution:

- Annotate occluded objects with a complete bounding box, even if some parts are concealed.

- Consider using segmentation-based annotation for intricate occlusions when necessary.

- Train the model to handle partial visibility by including both fully visible and partially occluded examples in the training data.
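One way to record occlusion is to store the full-extent box together with an optional box around the visible part. The sketch below is a hypothetical record layout (`OccludedAnnotation`, `full_box`, `visible_box` are illustrative names), and the visible fraction it computes is only a rough box-area proxy, not a pixel-accurate measure.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def box_area(box: Box) -> float:
    x_min, y_min, x_max, y_max = box
    return max(0.0, x_max - x_min) * max(0.0, y_max - y_min)


@dataclass
class OccludedAnnotation:
    label: str
    full_box: Box                      # estimated full extent, hidden parts included
    visible_box: Optional[Box] = None  # box around the visible part, if occluded

    def visible_fraction(self) -> float:
        # Rough proxy: ratio of visible-box area to full-box area.
        if self.visible_box is None:
            return 1.0
        full = box_area(self.full_box)
        return box_area(self.visible_box) / full if full > 0 else 0.0


person = OccludedAnnotation(
    "person", full_box=(100, 50, 300, 250), visible_box=(100, 50, 200, 250)
)
print(person.visible_fraction())
```

Keeping both boxes lets you train on the full extent while still filtering or weighting heavily occluded examples later.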

Overlapping and Duplicate Bounding Boxes

Error:

- Drawing multiple bounding boxes for a single object.

- Creating overlapping boxes that confuse the model.

Solution:

- Run an IoU-based de-duplication pass over the annotations before training. This is the same idea as non-maximum suppression (NMS), which is normally applied to model predictions rather than to labels.

- Establish automated checks to identify and remove duplicate entries.

- Use Intersection over Union (IoU) to decide when two boxes are similar enough to be merged.
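IoU is the area of overlap between two boxes divided by the area of their union. The sketch below computes it and flags same-class pairs above a threshold as likely duplicates; the `find_duplicates` helper, the `(label, box)` tuple format, and the 0.9 threshold are assumptions for illustration and should be tuned to your data.

```python
def iou(a, b):
    """Intersection over Union of two (x_min, y_min, x_max, y_max) boxes."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def find_duplicates(annotations, threshold=0.9):
    """Return index pairs that share a label and overlap at IoU >= threshold."""
    pairs = []
    for i in range(len(annotations)):
        for j in range(i + 1, len(annotations)):
            (label_i, box_i), (label_j, box_j) = annotations[i], annotations[j]
            if label_i == label_j and iou(box_i, box_j) >= threshold:
                pairs.append((i, j))
    return pairs


annotations = [
    ("car", (10, 10, 110, 110)),
    ("car", (12, 10, 110, 110)),     # near-copy of the first box
    ("car", (60, 60, 160, 160)),     # overlaps, but is a different object
    ("person", (10, 10, 110, 110)),  # same box, different class
]
print(find_duplicates(annotations))  # -> [(0, 1)]
```

Flagged pairs are best sent to a reviewer rather than deleted automatically, since objects that are genuinely close together can also overlap heavily.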

Misclassification of Objects

Error:

- Assigning an incorrect class label to an object.

- Confusing visually similar objects with one another (e.g., labeling a cat as a dog).

Solution:

- Adopt a hierarchical labeling approach for closely related objects.

- Incorporate AI-assisted labeling to minimize human mistakes.

- Introduce a secondary manual review process conducted by a subject matter expert.
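A hierarchical label scheme also helps decide which disagreements need an expert. The sketch below uses a hypothetical two-level taxonomy (`PARENT`) and a hypothetical helper (`disagreement_level`) to separate fine-grained confusions from coarse mistakes when two annotators label the same object.

```python
# Hypothetical two-level taxonomy: fine label -> parent label.
PARENT = {
    "cat": "animal",
    "dog": "animal",
    "car": "vehicle",
    "truck": "vehicle",
}


def disagreement_level(label_a, label_b):
    """Classify how two annotators' labels for the same object differ."""
    if label_a == label_b:
        return "agree"
    parent_a, parent_b = PARENT.get(label_a), PARENT.get(label_b)
    if parent_a is not None and parent_a == parent_b:
        return "fine-grained"  # same parent: route to an expert reviewer
    return "coarse"            # different parents: likely a plain mistake


print(disagreement_level("cat", "dog"))
print(disagreement_level("cat", "car"))
```

Fine-grained disagreements are the ones where a subject matter expert adds the most value, so routing by level keeps the secondary review focused.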

Inaccurate Box Dimensions and Coverage

Error:

- Bounding boxes that are excessively tight, resulting in the truncation of object parts.

- Boxes that are overly loose, capturing background or irrelevant elements.

Solution:

- Adhere to a consistent padding guideline (e.g., 5–10% around the object).

- Employ automated edge detection techniques to enhance box boundaries.

- Utilize data augmentation methods to accommodate variations in object size.
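A padding guideline can be applied programmatically to a tight box. The sketch below assumes the percentage is measured against the box's own width and height and is added on every side; that interpretation, the `pad_box` name, and the clamping to the image bounds are assumptions for this example.

```python
def pad_box(box, image_width, image_height, padding=0.05):
    """Expand a tight (x_min, y_min, x_max, y_max) box by a fraction of its size."""
    x_min, y_min, x_max, y_max = box
    pad_x = (x_max - x_min) * padding  # horizontal margin on each side
    pad_y = (y_max - y_min) * padding  # vertical margin on each side
    # Clamp so the padded box never leaves the image.
    return (
        max(0.0, x_min - pad_x),
        max(0.0, y_min - pad_y),
        min(float(image_width), x_max + pad_x),
        min(float(image_height), y_max + pad_y),
    )


print(pad_box((100, 200, 300, 400), image_width=640, image_height=480))
print(pad_box((600, 440, 640, 480), image_width=640, image_height=480))
```

Whatever rule you choose, applying it the same way to every box matters more than the exact percentage.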

Class Imbalance in the Dataset

Error:

- An excessive number of annotations for certain objects while others are insufficiently represented.

- This leads to biased training, causing the model to perform better on the more prevalent classes.

Solution:

- Achieve dataset balance by increasing the number of samples for underrepresented classes.

- Utilize synthetic data generation to enhance the representation of rare classes.

- Implement class weighting during the training of the model.
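Class weighting can be as simple as weighting each class inversely to its frequency. The sketch below uses the common "balanced" heuristic, total / (number of classes × class count); the function name and the example counts are illustrative, and how the weights are passed to the loss depends on your training framework.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each class by total / (n_classes * class_count)."""
    counts = Counter(labels)
    total = sum(counts.values())
    n_classes = len(counts)
    return {cls: total / (n_classes * n) for cls, n in counts.items()}


# Illustrative label list: one entry per annotated box.
labels = ["car"] * 80 + ["person"] * 15 + ["bicycle"] * 5
weights = inverse_frequency_weights(labels)
for cls, weight in sorted(weights.items(), key=lambda item: item[1]):
    print(f"{cls}: {weight:.2f}")
```

Rare classes receive larger weights, so their errors count more during training. Very large weights can destabilize training, so capping them is a reasonable precaution.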

Overlooking Background and Context

Error:

- Focusing solely on annotating objects while neglecting the surrounding context.

- This oversight makes it harder for the model to differentiate objects from intricate backgrounds.

Solution:

- Incorporate context-aware annotations.

- Annotate the surrounding environment to help the model distinguish between foreground and background elements.

- Use multi-label annotations for complex scenes.

Neglecting Scale and Perspective

Error:

- Applying uniform annotation methods to objects of different sizes.

- This approach hampers the model's ability to identify smaller or distant objects.

Solution:

- Implement multi-scale training datasets.

- Introduce augmentation techniques that allow for zooming in and out.

- Employ a scale-normalization method during the preprocessing phase.
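Before choosing a multi-scale or augmentation strategy, it helps to know how box sizes are distributed in the dataset. The sketch below buckets boxes by pixel area using the thresholds from the COCO evaluation convention (small below 32², medium below 96², large otherwise); the `size_bucket` helper and the sample boxes are illustrative.

```python
from collections import Counter


def size_bucket(box, small_max=32 ** 2, medium_max=96 ** 2):
    """Bucket a (x_min, y_min, x_max, y_max) box by its pixel area."""
    x_min, y_min, x_max, y_max = box
    area = (x_max - x_min) * (y_max - y_min)
    if area < small_max:
        return "small"
    if area < medium_max:
        return "medium"
    return "large"


boxes = [(0, 0, 20, 20), (10, 10, 70, 60), (0, 0, 200, 150), (5, 5, 25, 30)]
distribution = Counter(size_bucket(box) for box in boxes)
print(distribution)
```

If one bucket is nearly empty, that is a signal to collect more examples at that scale or to add zoom-based augmentation for it.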

Recommended Practices for High-Quality Bounding Box Annotation

To prevent these errors, adhere to the following best practices:

- Establish Clear Annotation Guidelines – Ensure that all team members adhere to a uniform labeling approach.

- Combine Automation with Human Oversight – Utilize AI-driven annotation tools, but always have human specialists validate the labels.

- Track Model Performance – Examine misclassified objects and adjust your dataset as needed.

- Conduct Quality Assessments – Regularly inspect the dataset for discrepancies and inaccuracies.

- Continuously Refine – Dataset improvement is an ongoing effort; keep updating the dataset as the model evolves.

Concluding Remarks

While bounding box annotation may appear straightforward, minor errors can greatly reduce model accuracy and effectiveness. By recognizing and correcting these common issues, you can build a clean, consistent dataset that improves the performance of object detection models. Interested in raising the quality of your dataset? Learn more about the bounding box annotation services at Globose Technology Solutions and how our skilled team can help you create precise, high-quality datasets for your AI initiatives.